As you may know, each chat model has a different context length limit:

- GPT-4o: 128,000 tokens

- Claude 3.5 Sonnet: 200,000 tokens

- Gemini 1.5 Pro: up to 2M tokens


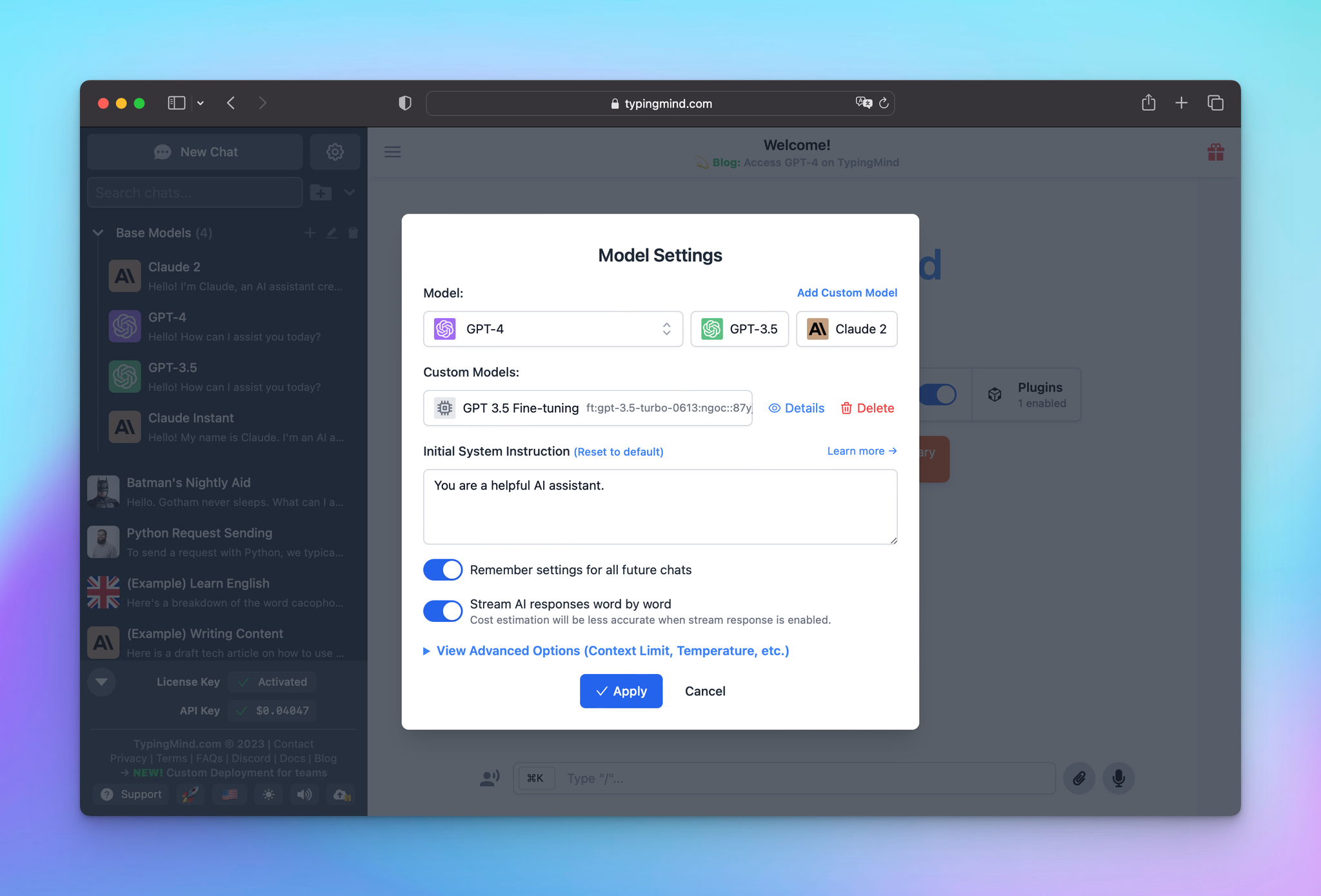


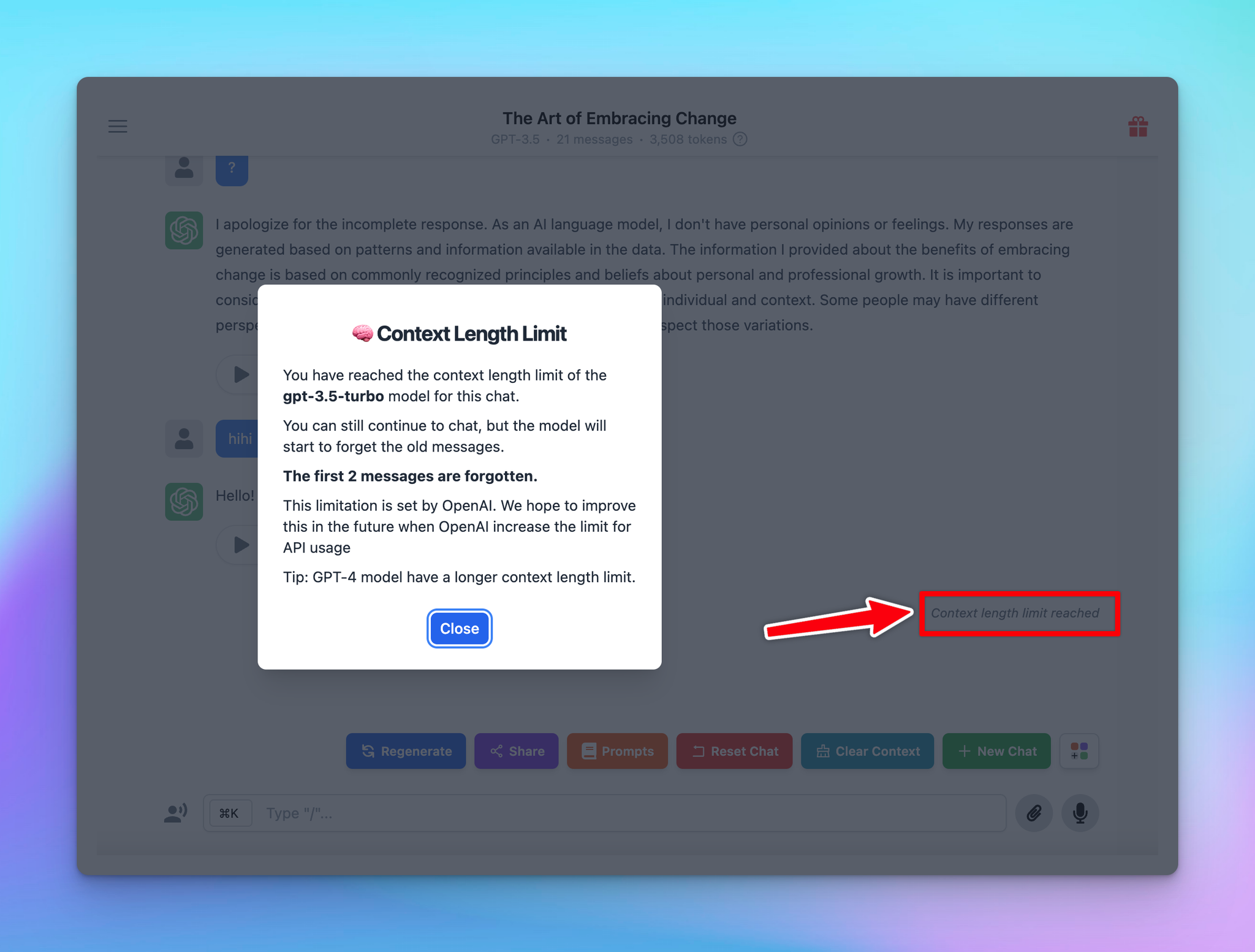

What happens when you reach the context length limit on TypingMind?

When a TypingMind conversation reaches the context length limit:

- An alert that says “Context length limit reached” will appear

- You can still keep chatting with the AI assistant. However, the system automatically forgets a certain number of the earliest text messages to make room for the new content you enter.

Please note that the AI model always preserves the system message you set up via the initial system instruction or an AI Character.
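The article does not spell out the exact trimming algorithm, so the following is only a minimal Python sketch of the behavior described above: drop the earliest messages first until the conversation fits the limit, while always keeping the system message. It assumes messages are dicts with `role` and `content` keys, that the system message comes first, and that a whitespace word count stands in for a real tokenizer. The names `trim_to_token_limit` and `approx_tokens` are hypothetical, not part of TypingMind.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def approx_tokens(text: str) -> int:
    # Hypothetical stand-in for a real tokenizer: counts whitespace-separated words.
    return len(text.split())


def trim_to_token_limit(
    messages: List[Message],
    max_tokens: int,
    count: Callable[[str], int] = approx_tokens,
) -> List[Message]:
    """Drop the earliest non-system messages until the total fits in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    # The system message is always kept, so it is charged against the budget first.
    budget = max_tokens - sum(count(m["content"]) for m in system)
    used = sum(count(m["content"]) for m in rest)

    # Forget the oldest messages first until the remainder fits.
    while rest and used > budget:
        used -= count(rest.pop(0)["content"])

    return system + rest


history = [
    {"role": "system", "content": "You are a helpful assistant."},  # 5 words
    {"role": "user", "content": "one two three"},                   # 3 words
    {"role": "assistant", "content": "four five"},                  # 2 words
    {"role": "user", "content": "six seven eight nine"},            # 4 words
]
trimmed = trim_to_token_limit(history, max_tokens=10)
# trimmed keeps the system message and only the last user message
```

With a 10-token budget, the 5-word system message leaves room for 5 more, so the two oldest messages are forgotten and only the newest one survives alongside the system message.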

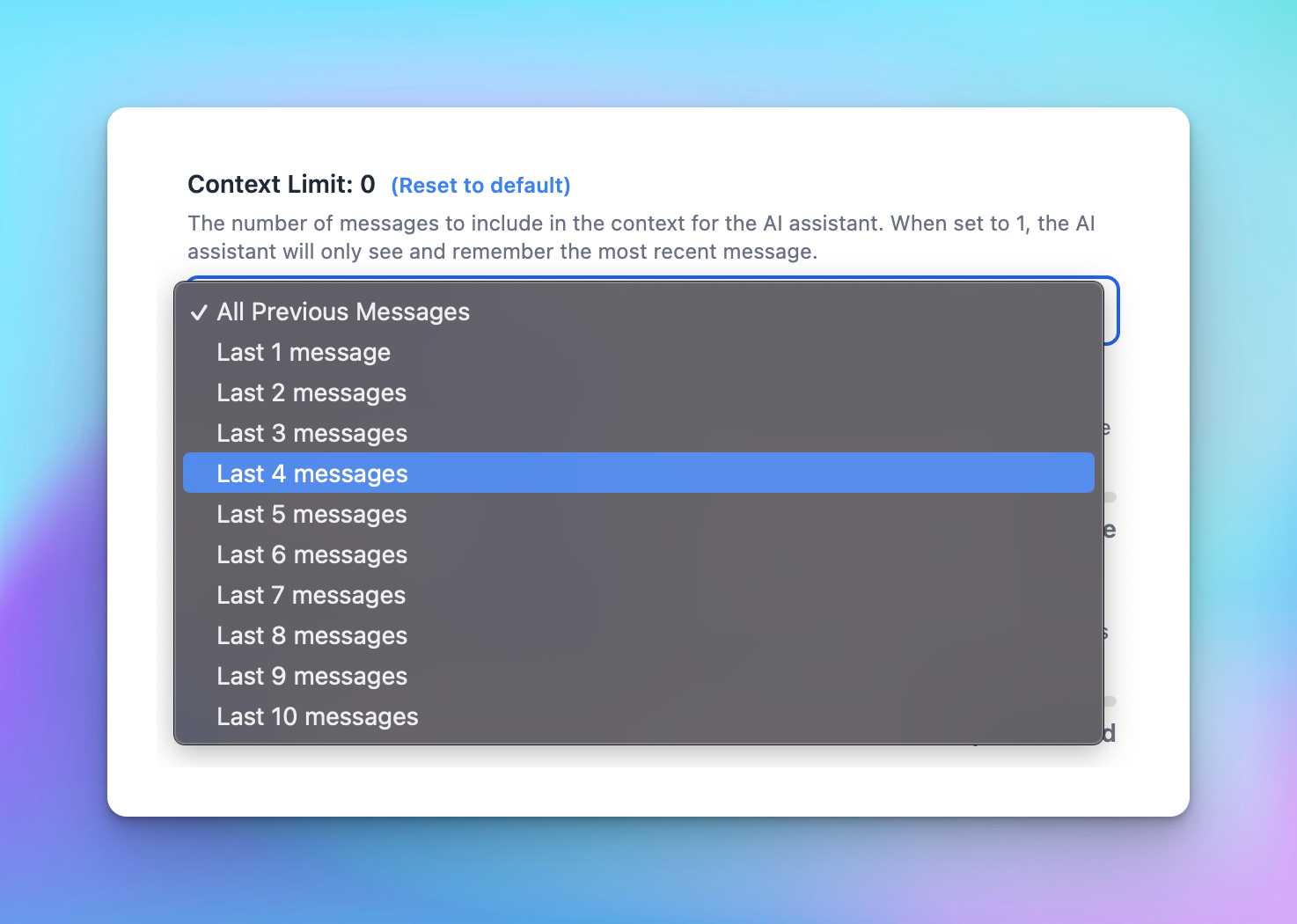

Make use of the context limit on TypingMind

Setting a context limit makes the AI model remember only a certain number of the most recent messages.

So, if your earlier conversation history isn't crucial, you can limit the AI's memory to only the latest x messages.

Even so, the AI still remembers and follows the guidance in your system instruction. It only forgets your earlier messages.
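As a rough illustration of a message-count context limit, here is a minimal Python sketch, not TypingMind's actual implementation. It assumes each message is a dict with `role` and `content` keys and that the system message should always be sent; `apply_context_limit` is a hypothetical name.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def apply_context_limit(messages: List[Message], limit: int) -> List[Message]:
    """Keep the system message(s) plus only the `limit` most recent other messages."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    # Guard against limit == 0: rest[-0:] would return the whole list.
    recent = rest[-limit:] if limit > 0 else []
    return system + recent


chat = [{"role": "system", "content": "Answer in French."}] + [
    {"role": "user" if i % 2 == 0 else "assistant", "content": f"message {i}"}
    for i in range(6)
]
sent = apply_context_limit(chat, limit=2)
print([m["content"] for m in sent])
# ['Answer in French.', 'message 4', 'message 5']
```

The system instruction ("Answer in French.") survives every cut, which is why the assistant keeps following it even after older messages are dropped.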